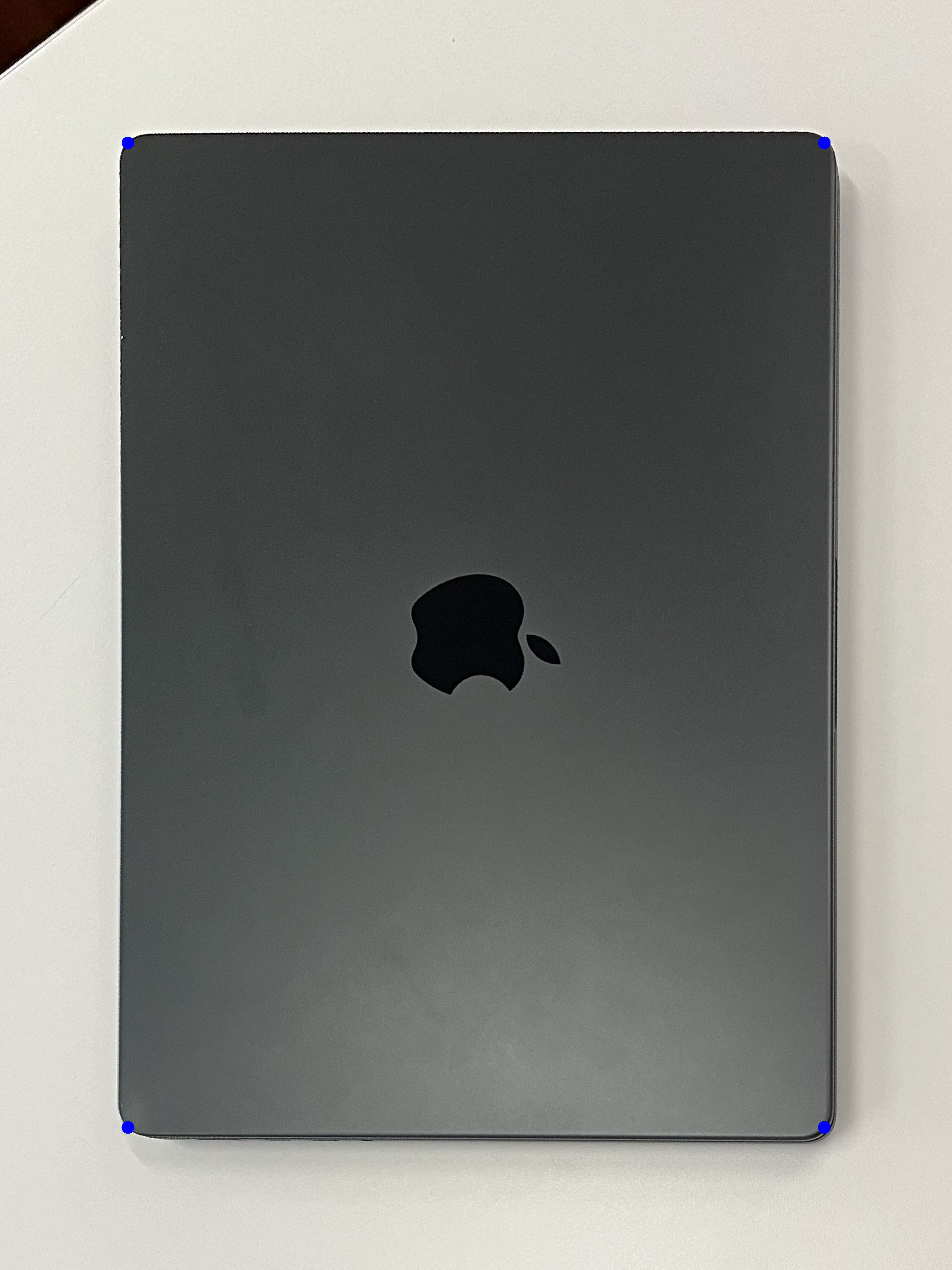

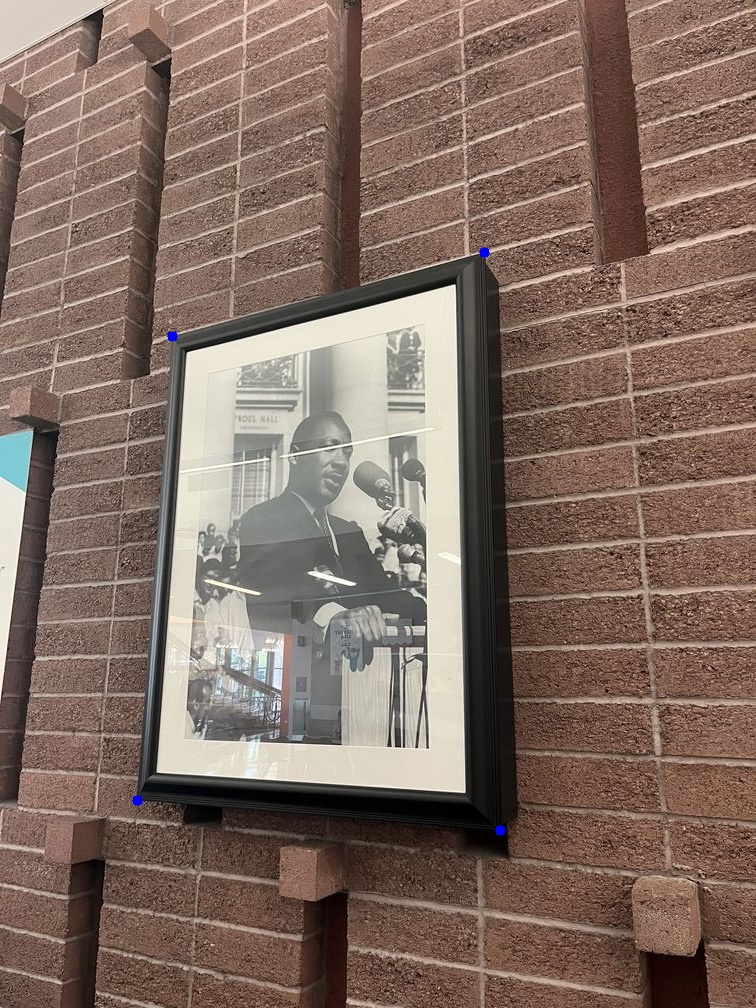

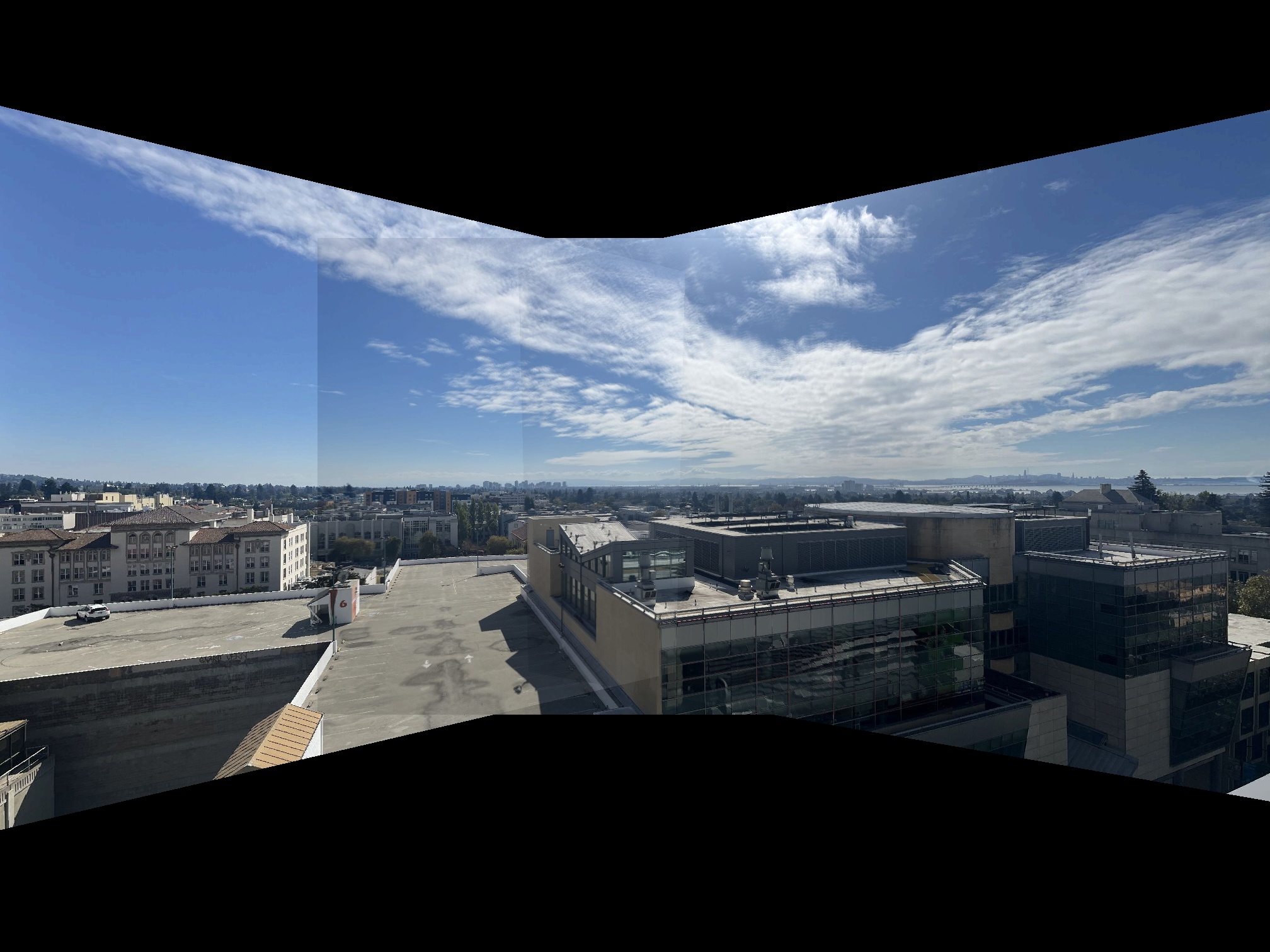

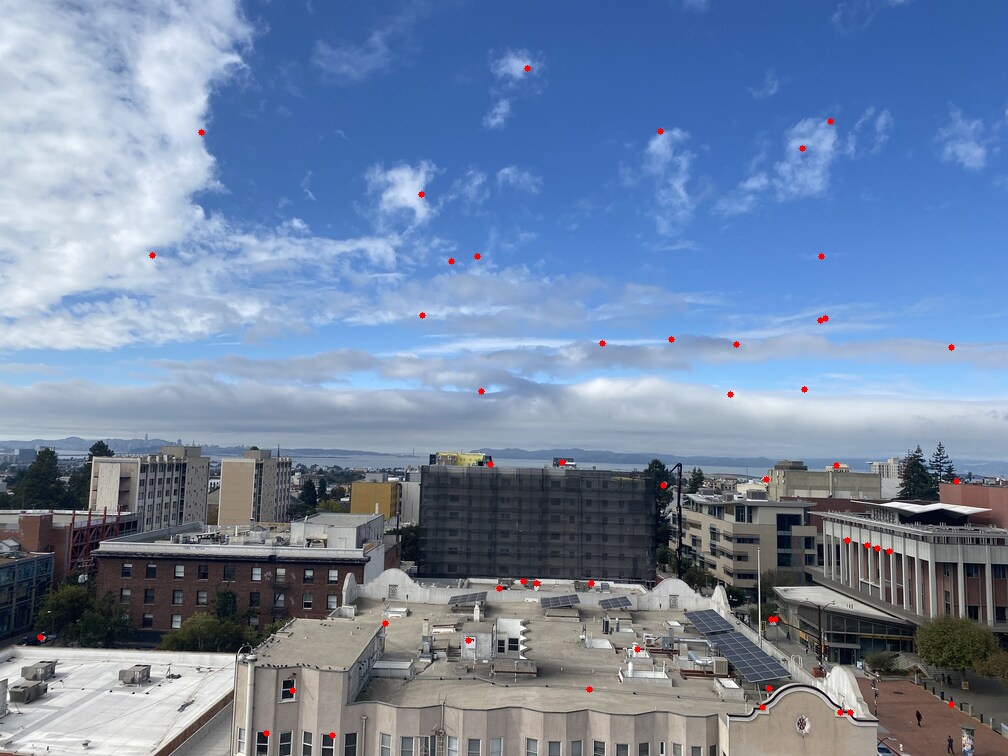

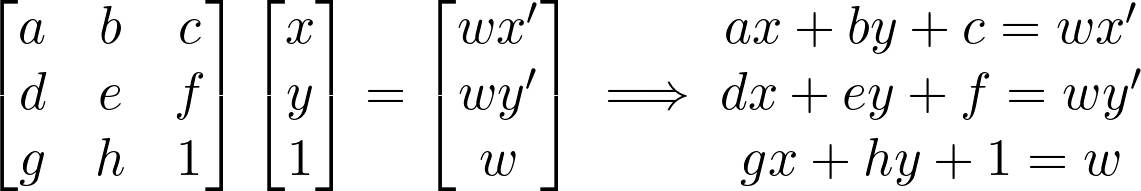

For us to be able to warp our images (and ultimately blend them into a mosaic), we need to know how to compute the homography matrix. We want to map points p in the input image to points p' in the output image using the 3×3 homography matrix H. We do this by writing each point in homogeneous coordinates, p = (x, y, 1), and computing the matrix product p' = Hp. The result is only defined up to scale, so we divide by its third coordinate w to get back the 2D point (x', y').
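As a small illustration of the mapping p' = Hp, the sketch below lifts points to homogeneous coordinates, multiplies by H, and divides out w. The helper name `apply_homography` is hypothetical (not from the original code), and it assumes points are stored as an (n, 2) NumPy array of (x, y) pairs.

```python
import numpy as np

def apply_homography(H, pts):
    """Hypothetical helper: map (n, 2) points (x, y) through a 3x3 homography H."""
    pts = np.asarray(pts, dtype=float)
    ones = np.ones((pts.shape[0], 1))
    p = np.hstack([pts, ones]).T          # shape (3, n): columns are (x, y, 1)
    p_prime = H @ p                       # shape (3, n): columns are (w x', w y', w)
    return (p_prime[:2] / p_prime[2]).T   # divide out w -> shape (n, 2)

# A pure scale-and-shift H keeps w = 1: (1, 1) -> (2*1 + 1, 2*1 + 3) = (3, 5)
H = np.array([[2.0, 0.0, 1.0],
              [0.0, 2.0, 3.0],
              [0.0, 0.0, 1.0]])
print(apply_homography(H, [[1, 1]]))
```

When the bottom row of H is not (0, 0, 1), w varies from point to point, which is what gives homographies their perspective effect.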

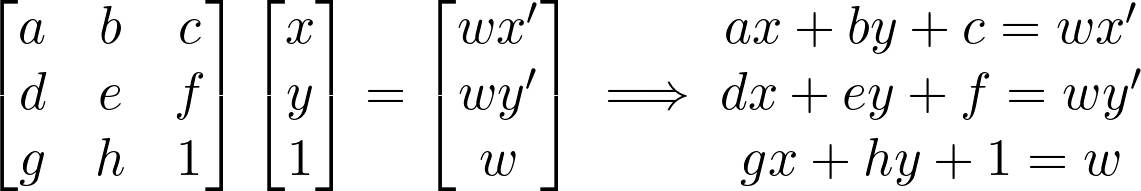

Given points (x, y) and (x', y'), we have the following:

Left: Hp = p'; Right: matrix multiplication expanded out to a system of equations
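Written out in text, and labeling the entries of H as a through h row by row with the bottom-right entry fixed to 1 (these letter labels are our own and may differ from the figure), the product and its expanded form are:

$$
\begin{bmatrix} a & b & c \\ d & e & f \\ g & h & 1 \end{bmatrix}
\begin{bmatrix} x \\ y \\ 1 \end{bmatrix}
=
\begin{bmatrix} wx' \\ wy' \\ w \end{bmatrix}
\quad\Longrightarrow\quad
\begin{aligned}
ax + by + c &= wx' \\
dx + ey + f &= wy' \\
gx + hy + 1 &= w
\end{aligned}
$$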

Our goal is to solve for the unknowns in H. H is a 3×3 matrix, but because it is only defined up to scale, we can fix its bottom-right entry to 1, which leaves 8 unknowns. Each pair of corresponding points gives two equations (one for x' and one for y'), so we need at least 4 pairs of points to solve for H; with more than 4 pairs, the system is overdetermined. We stack all of our equations into a matrix A, a vector x, and a vector b, where n is the number of correspondence points we annotate our images with: A is a 2n × 8 matrix, x is an 8 × 1 vector, and b is a 2n × 1 vector. A and b contain the known values, and x holds the unknowns that we then use to construct H. Rearranging the equations above to eliminate w, writing them as a matrix product, and generalizing from 1 point to n points, we get the following:

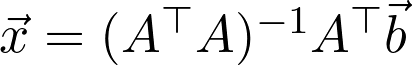
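For reference, here is one way to write the two rows contributed by a single correspondence (x_i, y_i) ↔ (x_i', y_i'). It labels the entries of H as a through h row by row, with the bottom-right entry fixed to 1; these labels are our own, and the figure may order the unknowns differently. The third row of Hp = p' gives w = g x_i + h y_i + 1. Substituting this into the first two rows and moving the unknowns to the left side gives:

$$
\begin{aligned}
a x_i + b y_i + c - g x_i x_i' - h y_i x_i' &= x_i' \\
d x_i + e y_i + f - g x_i y_i' - h y_i y_i' &= y_i'
\end{aligned}
\qquad\Longleftrightarrow\qquad
\begin{bmatrix}
x_i & y_i & 1 & 0 & 0 & 0 & -x_i x_i' & -y_i x_i' \\
0 & 0 & 0 & x_i & y_i & 1 & -x_i y_i' & -y_i y_i'
\end{bmatrix}
\begin{bmatrix} a \\ b \\ c \\ d \\ e \\ f \\ g \\ h \end{bmatrix}
=
\begin{bmatrix} x_i' \\ y_i' \end{bmatrix}
$$

Stacking these row pairs for i = 1, …, n produces the 2n × 8 matrix A and the 2n × 1 vector b.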

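Now we have a system of the form Ax = b, where we know the values in A and b and need to solve for x. Since the system is generally overdetermined (and hand-annotated points are noisy), we solve it with least squares, choosing the x that minimizes $\|Ax - b\|^2$:

$$x = (A^\top A)^{-1} A^\top b$$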

Finally, we take the 8 values in x, append 1 as the bottom-right entry, and reshape them into the 3×3 matrix H. That's it! I've implemented this logic in a method called computeH, so I can call H = computeH(im1_pts, im2_pts) and use H wherever it's needed.
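Here is a minimal sketch of what computeH could look like. This is our own reconstruction, not necessarily the original implementation. It assumes `im1_pts` and `im2_pts` are (n, 2) NumPy arrays of (x, y) coordinates, that H maps im1 points to im2 points, and that the unknowns are ordered a through h row by row. It uses `np.linalg.lstsq` rather than explicitly forming $(A^\top A)^{-1}$, which gives the same least-squares solution when A has full column rank but is numerically more stable.

```python
import numpy as np

def computeH(im1_pts, im2_pts):
    """Sketch: estimate the 3x3 homography H with im2 ~ H @ im1 (n >= 4 pairs)."""
    p = np.asarray(im1_pts, dtype=float)
    q = np.asarray(im2_pts, dtype=float)
    n = p.shape[0]
    if n < 4 or q.shape[0] != n:
        raise ValueError("need at least 4 matching point pairs")

    A = np.zeros((2 * n, 8))
    b = np.zeros(2 * n)
    for i, ((x, y), (xp, yp)) in enumerate(zip(p, q)):
        # x' row: a x + b y + c - g x x' - h y x' = x'
        A[2 * i] = [x, y, 1, 0, 0, 0, -x * xp, -y * xp]
        b[2 * i] = xp
        # y' row: d x + e y + f - g x y' - h y y' = y'
        A[2 * i + 1] = [0, 0, 0, x, y, 1, -x * yp, -y * yp]
        b[2 * i + 1] = yp

    # Least-squares solve for the 8 unknowns (a..h).
    h, *_ = np.linalg.lstsq(A, b, rcond=None)
    # Append the fixed bottom-right entry and reshape row by row into 3x3.
    return np.append(h, 1.0).reshape(3, 3)

# Round trip: generate exact correspondences from a known H, then recover it.
H_true = np.array([[1.2, 0.1, 5.0],
                   [-0.05, 0.9, -3.0],
                   [0.001, 0.002, 1.0]])
src = np.array([[0, 0], [100, 0], [100, 80], [0, 80], [50, 40], [20, 70]], dtype=float)
hom = np.hstack([src, np.ones((len(src), 1))]) @ H_true.T   # rows are (w x', w y', w)
dst = hom[:, :2] / hom[:, 2:]
print(np.allclose(computeH(src, dst), H_true))
```

With exactly 4 pairs (no three collinear), the system is square and the solution passes through all four points. With more pairs, each clicked-point error gets averaged out by the least-squares fit. Rescaling coordinates before solving (Hartley normalization) is a common refinement that is not shown here.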